The anti-nuclear campaigner and physician Dr. Helen Caldicott organized a two-day symposium in February 2015. She assembled an international panel of leading experts in disarmament, political science, existential risk, anthropology, medicine, nuclear weapons, and artificial intelligence. MIT professor Noam Chomsky spoke on nuclear weapons as “A Pathology That Could Yield to Catastrophe if Not Cured.” His presence at public events, or his absence, usually makes a huge difference. Media outlets such as Democracy Now came for his talk but did not stay for the other 20-plus speakers. It appears that the topic was just too scary for many.

What they missed was a wealth of information and related questions on Artificial Intelligence and the Risk of Accidental Nuclear War; on recently proven facts about a global nuclear winter that could be caused by unleashing just a few nuclear weapons; on the expanding Militarization of Space; on the Power and Pathology of the US Military Industrial Complex; on privatization of the US Nuclear Weapons Labs; and on nuclear war crimes in the Marshall Islands. They also missed two of the vibrant movements to abolish nuclear weapons: a divestment effort under the title Don’t Bank on the Bomb, and ICAN, the International Campaign to Abolish Nuclear Weapons.

Efforts to abolish nuclear weapons and war began before the atom bombs were even built. A few of the scientists who went on to work on the Manhattan Project recognized the unheard-of explosive potential of atomic weapons and the risks of spreading radiation. In a TUC Radio program about the first nuclear chain reaction, the historian Iain Boal mentioned Leo Szilard, who witnessed the experiment by Enrico Fermi in an abandoned racquets court in Chicago on December 2, 1942. Iain Boal:

Leo Szilard was on that balcony that day. It was very, very cold and they could see their breath. And they were standing there with a bottle of Chianti. And it was Szilard who in 1933 in London, as he walked across Southampton Road and the world cracked open, had been the first to consider at that moment how it might be possible to set up a nuclear chain reaction and liberate energy on an industrial scale and to make a bomb. And he stayed behind on the racquets court. There was a crowd there, he said later, and then Fermi and I stayed there alone. I shook hands with Fermi and I said I thought this day would go down as a black day in the history of mankind.

Leo Szilard later said that when he crossed Southampton Road in 1933 he suddenly knew in a flash of recognition that by his invention universal death might come into the world. The Hungarian American physicist was the first to conceive of the nuclear chain reaction and he patented the idea of a nuclear reactor along with Enrico Fermi.

Szilard participated in the Manhattan Project, but tried by all means available to him to convince US President Truman not to use atomic weapons on Japan. Szilard urged US policy-makers to demonstrate the power of these weapons to leaders of the world by exploding an atomic device in an uninhabited area.

Here is President Truman on August 6, 1945:

A short time ago an American aeroplane dropped one bomb on Hiroshima and destroyed its usefulness to the enemy. That bomb has more power than 20,000 tons of TNT. The Japanese began the war from the air at Pearl Harbor. They have been repaid many fold. And the end is not yet.

With this bomb we have now added a new and revolutionary increase in destruction to supplement the growing power of our armed forces. In their present form these bombs are now in production and even more powerful forms are in development.

It is an atomic bomb. It is a harnessing of the basic power of the Universe. The force from which the sun draws its power has been loosed against those who brought war to the Far East.

We are now prepared to destroy more rapidly and completely every productive enterprise the Japanese have in any city.... Let there be no mistake: we shall completely destroy Japan’s power to make war....

We have spent more than 2 billion dollars on the greatest scientific gamble in history. And we have won.... What has been done is the greatest achievement of organized science in history.

That was President Truman in a clip from American History TV.

Albert Einstein, whose work in physics also contributed to the discovery of nuclear fusion and fission, initially supported the Manhattan Project but never participated in it. After seeing what the bombs did in Hiroshima and Nagasaki, he became one of the eloquent critics of nuclear weapons and until the day he died raised the philosophical and ethical issues of the atomic age.

His most frequently cited quote is: “The unleashed power of the atom has changed everything save our modes of thinking and we thus drift towards unparalleled catastrophe.” Einstein explained that further by saying: “We cannot solve our problems with the same thinking we used when we created them.” “It has become appallingly obvious that our technology has exceeded our humanity.”

Einstein also made an appeal for activism when he said: “The world is a dangerous place to live; not because of the people who are evil, but because of the people who don’t do anything about it.”

The global danger inherent in nuclear weapons has increased exponentially with the advent of computers and more recently of AI – artificial intelligence.

The Union of Concerned Scientists describes the current status of nuclear weapons: they are on hair-trigger alert. That is a U.S. military policy that enables the rapid launch of nuclear weapons. Missiles on hair-trigger alert are maintained in a ready-for-launch status, staffed by around-the-clock launch crews, and can be airborne in a matter of minutes. By keeping land-based missiles on hair-trigger alert, and nuclear-armed bombers ready for takeoff, the United States could launch vulnerable weapons before they were hit by incoming Russian warheads.

Computers are involved at all stages of this process: in observing a launch against the US, in evaluating the data, in guidance systems for nuclear-tipped rockets, and so on. There is already a long list of false alerts in which computer data were involved. In spite of that, the tendency is to use more and more computers in the hair-trigger alert system and even to automate responses.

In fact, the entire February 2015 conference on The Dynamics of Possible Nuclear Extinction was inspired by the new, expanding debate about the risks of computerized artificial intelligence among some of today’s most respected physicists, computer scientists, and inventors.

Here is what Helen Caldicott had to say in her opening remarks:

I set up this conference, basically, because I read an article in The Atlantic Monthly some months ago quoting Stephen Hawking ... and Max Tegmark who is one of our speakers ...

And they were talking about artificial intelligence and it’s moving very, very fast at the moment. Elon Musk talks about that too. And they’re very worried about it. And yet they can’t seem to stop themselves keeping going. It said that within about 10 or 20 years, but maybe shorter, that computers will be autonomous, that they may be able to reproduce themselves. That you can’t—I’m saying this—program morality or conscience into a computer. And that they’re worried that computers themselves could initiate a nuclear war.

When I read that I thought, My God, as if things aren’t bad enough with the United States and Russia militarily confronting each other now for the first time since the Cold War. Computers could take over our world in that way.

So that’s why I set the conference up.

After hearing that, I found that connection with Artificial Intelligence so intriguing that I retraced the steps Helen Caldicott took from inspiration to the opening of her Symposium in February 2015.

It all began with a short article in the Huffington Post on April 19, 2014, entitled: “Transcending Complacency on Superintelligent Machines.”

That article received very little attention, even though the four co-authors include famous names such as Stephen Hawking, Director of Research at the Centre for Theoretical Physics at Cambridge, and even though the article was an introduction of sorts to four think tanks with thought-provoking names: the Cambridge Centre for the Study of Existential Risk, the Future of Humanity Institute, the Machine Intelligence Research Institute, and the Future of Life Institute.

Stephen Hawking, Berkeley computer science professor Stuart Russell, and Max Tegmark and Frank Wilczek, both physics professors at MIT, wrote the following:

Artificial intelligence (AI) research is now progressing rapidly. Recent landmarks ... as self-driving cars, a computer winning at Jeopardy!, and the digital personal assistants Siri, Google Now and Cortana are merely symptoms of an IT arms race fueled by unprecedented investments ...

The potential benefits are huge; everything that civilization has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools AI may provide ... Success in creating AI would be the biggest event in human history.

Unfortunately, it might also be the last, unless we learn how to avoid the risks. In the near term, for example, world militaries are considering autonomous weapon systems that can choose and eliminate their own targets; the UN and Human Rights Watch have advocated a treaty banning such weapons....

Looking further ahead, there are no fundamental limits to what can be achieved: there is no physical law precluding particles from being organized in ways that perform even more advanced computations than the arrangements of particles in human brains.... One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.

So, facing possible futures of incalculable benefits and risks, the experts are surely doing everything possible to ensure the best outcome, right? Wrong. If a superior alien civilization sent us a text message saying, “We’ll arrive in a few decades,” would we just reply, “OK, call us when you get here—we’ll leave the lights on”? Probably not—but this is more or less what is happening with AI. Although we are facing potentially the best or worst thing ever to happen to humanity, little serious research is devoted to these issues outside small non-profit institutes ... All of us—not only scientists, industrialists and generals—should ask ourselves what can we do now to improve the chances of reaping the benefits and avoiding the risks [of artificial intelligence].

When this article in the Huffington Post was ignored, then reprinted in the Guardian and again ignored, the journalist James Hamblin wrote an expanded follow-up in The Atlantic. Hamblin chose the title “But What Would the End of Humanity Mean for Me?” and started out: “Preeminent scientists are warning about serious threats to human life in the not-distant future, including climate change and superintelligent computers. Most people don’t care.”

Hamblin checked the record of MIT Physicist Max Tegmark and found that “[t]he single existential risk that Tegmark worries about most is unfriendly artificial intelligence.... when computers are able to start improving themselves, there will be a rapid increase in their capacities, and then, Tegmark says, it’s very difficult to predict what will happen.”

Tegmark told Lex Berko at Motherboard earlier in 2014, “I would guess there’s about a 60 percent chance that I’m not going to die of old age, but from some kind of human-caused calamity. Which ... suggest[s] that I should spend a significant portion of my time actually worrying about this. We should in society, as well.”

James Hamblin really wanted to know what all of this means in more concrete terms, so he asked about it in person as Tegmark was walking around the Pima Air and Space Museum in Tucson with his kids.

Tegmark said: “Longer term—and this might mean 10 years, it might mean 50 or 100 years, depending on who you ask—when computers can do everything we can do, ... they will probably very rapidly get vastly better than us at everything, and we’ll face this question we talked about in the Huffington Post article: whether there’s really a place for us after that, or not.” Tegmark then interrupted the interview to explain some of the Air and Space Museum displays to his children.

“This is very near-term stuff” he continued.

“Anyone who’s thinking about what their kids should study in high school or college should care a lot about this. The main reason people don’t act on these things is they’re not educated about them.”

Tegmark and his op-ed co-author Frank Wilczek, the Nobel laureate, draw on examples of Cold War automated systems that assessed threats and resulted in false alarms and near misses. “In those instances some human intervened at the last moment and saved us from horrible consequences ... That might not happen in the future,” said Wilczek.

He continued,

“But I think a widely perceived issue is when intelligent entities start to take on a life of their own. They revolutionized the way we understand chess, for instance. That’s pretty harmless. But one can imagine if they revolutionized the way we think about warfare or finance, either those entities themselves or the people that control them.”...

Automatic trading programs have already caused tremors in financial markets.... [Wilczek:] “[And I’m] particularly concerned about a subset of artificial intelligence: drone warriors.”...

Experts have wide-ranging estimates as to time scales [Hamblin writes]. Wilczek likens it to a storm cloud on the horizon. “It’s not clear how big the storm will be, or how long it’s going to take to get here. I don’t know. It might be 10 years before there’s a real problem. It might be 20, it might be 30. It might be five. But it’s certainly not too early to think about it, because the issues to address are only going to get more complex as the systems get more self-willed.”

These were excerpts from the article in The Atlantic by James Hamblin that Helen Caldicott took to heart. It inspired her to organize the Symposium on The Dynamics of Possible Nuclear Extinction in New York City in February 2015.

Stephen Hawking was unable to come. But MIT physics professor Max Tegmark gave his opinion on nuclear weapons and on how the potential for near-term extinction cuts off literally millions of years of a promising future for the earth and humanity.

[W]hat we’ve done with nuclear weapons is probably the dumbest thing we’ve ever done here on earth. I’m going to argue that it might also be the dumbest thing ever done in our universe....

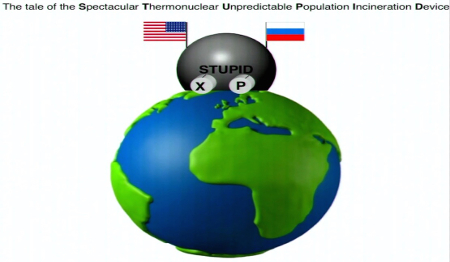

Here we are on this planet, and we humans have decided to build this device....

It’s called the Spectacular Thermonuclear Unpredictable Population Incineration Device. Okay, I’m a little bit inspired by Dr. Seuss here, I have to confess. This is a long mouthful so let’s just abbreviate it: S-T-U-P-I-D. Okay?

[I]t’s a very complicated device—it’s a bit like a Rube Goldberg machine inside. A very elaborate system. Nobody—there’s not a single person on the planet who actually understands how 100 percent of it works. Okay?....

[I]t was so complicated to build that it really took the talent and resources from more than one country, they worked really hard on it, for many, many years. And not just on the technical side—to invent the technology to be able to create what this device does. Namely, massive explosions around the planet.

But also to overcome a lot of human inhibitions towards doing just this. So this system actually involves also a lot of very clever social engineering where you put people in special uniforms and have a lot of peer pressure and you use all the latest social coercion technology to make people do things they otherwise normally wouldn’t do. Okay?....

And so a lot of clever thought has gone into building STUPID.

... It’s kind of remarkable that we went ahead and put so much effort into building it since actually, really, there’s almost nobody on this spinning ball in space who really wants it to ever get used ...

That’s MIT physics professor Max Tegmark. His fields are cosmology, quantum mechanics, and the math-physics link. He is the author of Our Mathematical Universe and co-founder of the Future of Life Institute. You will hear more from him in this radio series.

In January 2015 the technology inventor Elon Musk, CEO of Tesla Electric Cars and SpaceX, decided to donate $10M to the Future of Life Institute to run a global research program aimed at keeping artificial intelligence beneficial to humanity. Elon Musk believes that with AI we are summoning the demon.

I think we should be very careful about artificial intelligence. If I were to guess at what our biggest existential threat is, it’s probably that. So we need to be very careful with artificial intelligence.

[I’m] increasingly inclined to think that there should be some regulatory oversight at maybe the national and international level just to make sure that we don’t do something very foolish. I mean with artificial intelligence we are summoning the demon.

Elon Musk’s SpaceX program, which supplies the International Space Station, relies heavily on computers, which gives him an insider’s view. His warning should be of concern.

That was the opening of a miniseries on The Dynamics of Possible Nuclear Extinction, a February 2015 symposium organized by the Australian anti-nuclear campaigner, author, and physician Dr. Helen Caldicott.

Come back when TUC Radio returns for more on the human and technological factors that could precipitate a nuclear war, for the ongoing technological and financial developments relevant to the nuclear weapons arsenals, for news on the corporate marketing of nuclear technology and the underlying philosophical and political dynamics that have brought life on earth to the brink of extinction. And why MIT professor Noam Chomsky thinks that you should care.

You can hear this program again for free on TUC Radio’s website, www.tucradio.org. Look at the Newest Programs or the Podcast Page.

While you’re there you can subscribe to free weekly podcasts. Downloads are free, and we appreciate a donation of any size as a sign of appreciation and to keep TUC Radio on the air. If you’re unable to download online, you can get an audio CD at cost by ordering on TUC Radio’s secure website, tucradio.org. For information, call 707-463-2654. Our e-mail address is tuc@tucradio.org and the mailing address is Post Office Box 44, Calpella, CA 95418.

My name is Maria Gilardin. Thank you for listening. Give us a call.

The eight segments of this miniseries are:

- The Dynamics of Possible Nuclear Extinction – Artificial Intelligence, ONE

- The Dynamics of Possible Nuclear Extinction – Noam Chomsky, TWO

- The Dynamics of Possible Nuclear Extinction – MIT Physicist Max Tegmark, THREE

- The Dynamics of Possible Nuclear Extinction – Nuclear Winter, FOUR

- The Dynamics of Possible Nuclear Extinction – Weapons in Space, FIVE

- The Dynamics of Possible Nuclear Extinction – The Deep State, SIX

- International Campaigns to Abolish Nuclear Weapons: Ray Acheson and Tim Wright

- Don’t Bank on the Bomb – The Nuclear Divestment Movement